In a frenzied race against a looming carbon time bomb, tech behemoths are grappling with the environmental ramifications of their sprawling data centers worldwide. These data centers, essential for powering today’s digital infrastructure, have emerged as greedy consumers of energy, particularly as the demand for artificial intelligence (AI) continues to skyrocket.

As AI becomes increasingly integral to various industries, the energy demands of data centers are exploding. This, in turn, calls for urgent action to mitigate their massive environmental footprint.

AI’s Energy Appetite: Unleashing Data Center Emissions

Between 2010 and 2018, there was an estimated 550% increase globally in the number of data center workloads and computing instances.

Data centers and transmission networks collectively account for up to 1.5% of global energy consumption. They emit a volume of carbon dioxide comparable to Brazil’s annual output.

Hyperscalers like Google, Microsoft, and Amazon have committed to ambitious climate goals, aiming to decarbonize their operations. Hyperscalers are companies that operate large-scale, highly optimized, and efficient data center facilities.

However, the proliferation of AI poses a huge challenge to these objectives. The energy-intensive nature of graphics processing units (GPUs), essential for AI model training, magnifies the strain on energy resources.

According to the International Energy Agency (IEA), training a single AI model consumes more electricity than 100 households use in a year.

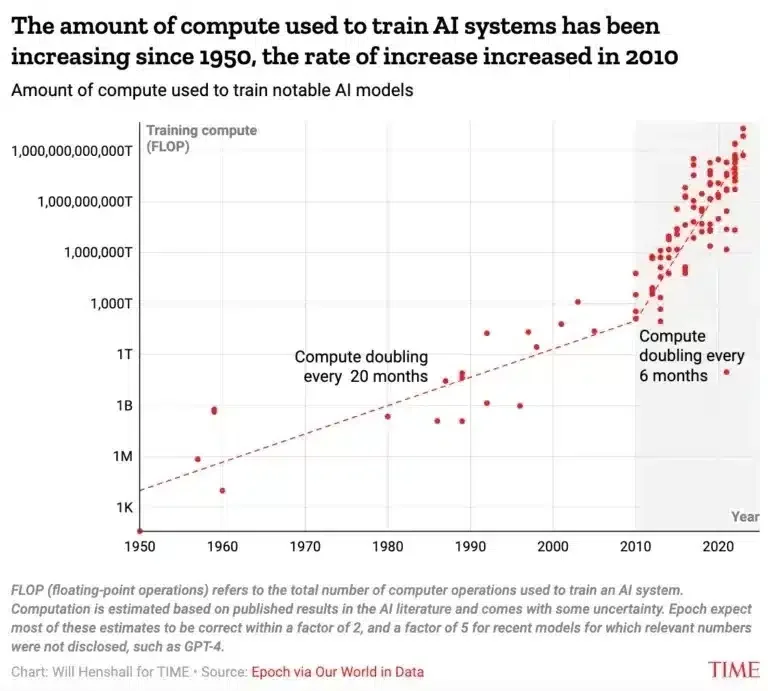

Per another source, the amount of computing power needed for AI training is doubling every 6 months. Fifty years ago, it doubled every 20 months, as seen in the chart below.

More alarmingly, in just over a decade, the computing power used for AI model development has increased by a staggering factor of 10 billion. And it shows no sign of slowing down.

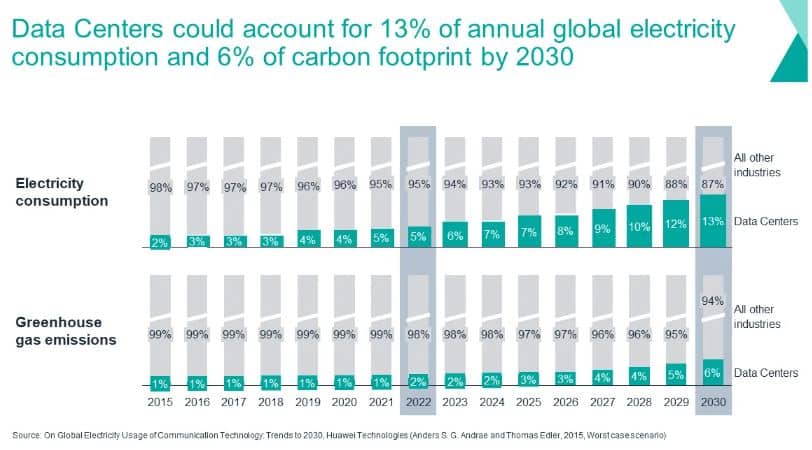
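To put the doubling figures in perspective, the arithmetic can be sketched in a few lines of Python. This is only a back-of-the-envelope illustration: the function names are made up for this example, and a "decade" is rounded to exactly 120 months, whereas the article says "just over a decade".

```python
import math


def growth_factor(months: float, doubling_months: float) -> float:
    """Total growth after `months` if a quantity doubles every `doubling_months`."""
    return 2 ** (months / doubling_months)


def implied_doubling_months(factor: float, months: float) -> float:
    """Doubling time (in months) that would yield `factor` growth over `months`."""
    return months / math.log2(factor)


DECADE_MONTHS = 120  # assumption: "just over a decade" rounded down to 10 years

# Growth over ten years at the two doubling rates quoted above.
print(f"{growth_factor(DECADE_MONTHS, 6):,.0f}")   # doubling every 6 months
print(f"{growth_factor(DECADE_MONTHS, 20):,.0f}")  # doubling every 20 months

# Doubling time implied by a 10-billion-fold increase over ten years.
print(f"{implied_doubling_months(1e10, DECADE_MONTHS):.1f}")
```

Under these assumptions, a 6-month doubling time yields roughly a million-fold increase per decade, against 64-fold for a 20-month doubling time. A 10-billion-fold increase over ten years would imply doubling about every 3.6 months, so the two figures quoted above probably cover different periods or sets of models.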

Industry estimates forecast that data centers’ share of global power use could reach up to 13% by 2030, while their share of global carbon emissions would be 6% in the same year.

The Cost of AI: Balancing Power and Progress

The climate risks posed by AI-driven computing are profound, with Nvidia CEO Jensen Huang highlighting AI’s significant energy requirements. Huang projected a doubling of data center costs within 5 years to accommodate the expanding AI ecosystem.

For instance, compute costs for training advanced AI models like GPT-3, boasting 175B parameters, and potentially GPT-4 are predictably substantial. The final training run of GPT-3 is estimated to have cost between $500,000 and $4.6 million.

Training GPT-4 could have incurred costs in the vicinity of $50 million. However, when factoring in the compute required for trial and error before the final training run, the overall training cost likely exceeds $100 million.

On average, large-scale AI models consume approximately 100x more compute resources than other contemporary AI models. If the trend of increasing model sizes continues at its current pace, some estimates project compute costs to surpass the entire GDP of the United States by 2037.

According to computer scientist Kate Saenko, the development of GPT-3 emitted over 550 tons of CO2 and consumed 1,287 megawatt-hours (MWh) of electricity. Those emissions are equivalent to the ones generated by a single individual taking 550 roundtrip flights between New York and San Francisco.

Moreover, such figures account only for the emissions directly associated with developing or preparing the AI for use. Other sources of emissions are not included.
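The GPT-3 figures quoted above can be cross-checked with simple arithmetic. The sketch below derives the carbon intensity of the electricity and the emissions per flight that those numbers imply. It assumes metric tons and treats "over 550 tons" as exactly 550, so the results are rough lower bounds rather than reported values.

```python
# Figures quoted in the article (attributed to Kate Saenko).
TONS_CO2 = 550           # "over 550 tons"; assumed to be metric tons
ELECTRICITY_MWH = 1_287  # electricity consumed, in megawatt-hours
ROUNDTRIP_FLIGHTS = 550  # New York to San Francisco round trips


def implied_intensity_kg_per_mwh(tons_co2: float, mwh: float) -> float:
    """Average kg of CO2 emitted per MWh of electricity consumed."""
    return tons_co2 * 1_000 / mwh


intensity = implied_intensity_kg_per_mwh(TONS_CO2, ELECTRICITY_MWH)
tons_per_flight = TONS_CO2 / ROUNDTRIP_FLIGHTS

print(f"{intensity:.0f} kg CO2 per MWh")           # about 427
print(f"{tons_per_flight:.1f} tons per round trip")  # 1.0
```

In other words, the comparison works out to about one ton of CO2 per passenger round trip, and roughly 427 kg of CO2 per MWh, which is the same as 427 g per kWh.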

Solutions to Reduce Data Center Carbon Footprints

To mitigate data center emissions, industry players have pursued various strategies, including investing in renewable energy and using carbon credits.

While these initiatives have yielded some progress, the escalating adoption of AI requires additional measures to achieve meaningful emission reductions.

Google’s load-shifting strategy exemplifies a promising approach to addressing this challenge. It synchronizes data center operations with renewable energy availability on an hourly basis.

By deploying sophisticated software algorithms, Google identifies regions with surplus solar and wind energy on the grid and strategically ramps up data center operations in these areas.

- The logic behind the approach is simple: Reduce emissions by upending the way data centers work.
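The idea behind load shifting can be illustrated with a small greedy scheduler. This is a simplified sketch, not Google's actual software: the `RegionForecast` type, the region names, and every number below are hypothetical placeholders. It assumes an hourly forecast of grid carbon intensity per region and a fixed amount of flexible capacity at each site.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class RegionForecast:
    name: str
    intensity_by_hour: tuple  # forecast grid intensity per hour, gCO2/kWh
    spare_capacity_kwh: float  # flexible load the site can absorb each hour


def shift_load(flexible_kwh_by_hour, regions):
    """For each hour, send flexible load to the cleanest regions first."""
    plan = []
    for hour, demand in enumerate(flexible_kwh_by_hour):
        remaining = demand
        allocation = {}
        # Cleanest grid first for this particular hour.
        for region in sorted(regions, key=lambda r: r.intensity_by_hour[hour]):
            if remaining <= 0:
                break
            take = min(remaining, region.spare_capacity_kwh)
            if take > 0:
                allocation[region.name] = take
                remaining -= take
        if remaining > 0:
            raise ValueError(f"not enough capacity in hour {hour}")
        plan.append(allocation)
    return plan


# Placeholder data: two made-up regions, two hours.
regions = [
    RegionForecast("region-a", (450, 120), 100),
    RegionForecast("region-b", (200, 300), 100),
]
plan = shift_load([150, 80], regions)
print(plan)
```

With these placeholder inputs, the first hour fills region-b (the cleaner grid at that time) before spilling 50 kWh into region-a, while the second hour runs entirely in region-a. A production system would also have to weigh latency, data residency, and grid constraints, which this sketch ignores.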

The tech giant has also launched the first initiative to align the power consumption of certain data centers with zero-carbon sources on an hourly basis. The goal is to power the machines with clean energy 24/7.

Google’s data centers are powered by carbon-free energy approximately 64% of the time, with 13 regional sites achieving an 85% reliance on such sources and seven sites globally surpassing the 90% mark, according to Michael Terrell, who spearheads Google’s 24/7 carbon-free energy strategy.
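Percentages like these depend on matching consumption with clean supply hour by hour rather than over a whole year. The sketch below shows one simplified way such a score could be computed. It is an assumption made for illustration, not Google's published methodology, and the hourly numbers are invented.

```python
def hourly_cfe_share(load_kwh, carbon_free_kwh):
    """Share of consumption matched by carbon-free supply in the same hour."""
    if len(load_kwh) != len(carbon_free_kwh):
        raise ValueError("both series must cover the same hours")
    total = sum(load_kwh)
    if total == 0:
        raise ValueError("total load must be positive")
    # Surplus clean energy in one hour cannot offset a shortfall in another.
    matched = sum(min(load, cfe) for load, cfe in zip(load_kwh, carbon_free_kwh))
    return matched / total


def annual_matching_share(load_kwh, carbon_free_kwh):
    """Volume-based matching: total clean supply vs. total load, capped at 100%."""
    return min(1.0, sum(carbon_free_kwh) / sum(load_kwh))


# Invented example: steady load, clean supply concentrated in two hours.
load = [10, 10, 10, 10]
clean = [20, 15, 5, 0]

hourly = hourly_cfe_share(load, clean)
annual = annual_matching_share(load, clean)
print(hourly, annual)
```

In this example the site buys as much clean energy as it consumes overall, so volume-based matching reports 100%, yet only 62.5% of its consumption is actually covered hour by hour. That gap is what a 24/7 target aims to close.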

Cirrus Nexus actively monitors global power grids to identify regions with abundant renewable energy. It then strategically allocates computing loads to minimize carbon emissions. By leveraging renewable energy sources and optimizing data center operations, the company has achieved significant reductions in carbon emissions.

Using cloud services offered by Amazon, Microsoft, and Google, the company was able to cut computing emissions for some workloads, both its own and its clients’, by 34%.

Navigating the AI-Driven Energy Crisis

In recent years, both Google and Amazon have experimented with adjusting data center usage patterns. They do so both for their internal operations and for clients using their cloud services.

Nvidia offers another solution to this AI-driven power crisis: its green computing accelerated analytics technology, which can slash computing costs and carbon footprints by up to 80%.

Implementing load shifting necessitates collaboration between data center operators, utilities, and grid operators to mitigate potential grid disruptions. Still, this strategy holds immense promise in advancing sustainability goals within the data center industry.

As the demand for AI soars, addressing the energy requirements of data centers is paramount to mitigating carbon emissions. Innovative strategies such as load shifting offer a pathway towards achieving carbon neutrality while ensuring the reliability and efficiency of data center operations in an increasingly AI-driven landscape.