Following Nvidia’s first-quarter earnings report, CEO Jensen Huang emphasized that the company is facing overwhelming demand rather than a lull, even as it transitions from its Hopper AI platform to the more advanced Blackwell system. Huang dismissed concerns about a potential slowdown in demand, stating:

“People want to deploy these data centers right now. They want to put our [graphics processing units] to work right now and start making money and start saving money. And so that demand is just so strong.”

Surpassing Expectations with Stellar Q1 Results

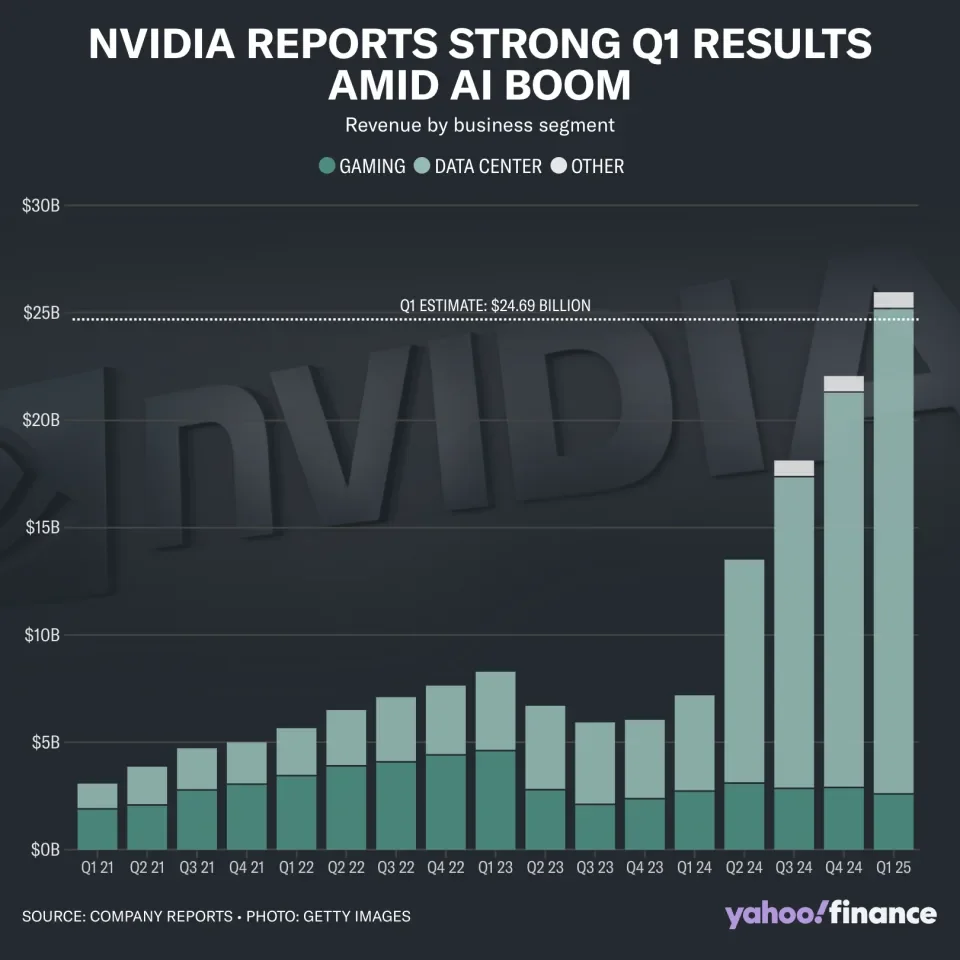

For the first quarter, Nvidia reported stellar results. Adjusted earnings per share reached $6.12 on revenue of $26 billion, representing year-over-year increases of 461% and 262%, respectively. Non-GAAP operating income was $18.1 billion for the quarter.

Nvidia expects its revenue for the current quarter to be around $28 billion, plus or minus 2%, surpassing analysts’ expectations of $26.6 billion.
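The growth rates and the guidance band above can be sanity-checked with a few lines of arithmetic. The sketch below uses only the figures quoted in this article; the helper names (`prior_year_value`, `guidance_range`) are illustrative and not taken from any Nvidia material, and the results are rounded estimates rather than reported numbers.

```python
def prior_year_value(current, yoy_growth_pct):
    """Back out last year's figure from this year's value and its YoY growth."""
    return current / (1 + yoy_growth_pct / 100)


def guidance_range(midpoint, tolerance_pct):
    """Return the (low, high) band for guidance quoted as midpoint +/- tolerance."""
    delta = midpoint * tolerance_pct / 100
    return midpoint - delta, midpoint + delta


# Figures as quoted in the article (EPS in dollars, revenue in billions of dollars).
implied_eps = prior_year_value(6.12, 461)
implied_revenue_bn = prior_year_value(26.0, 262)

low_bn, high_bn = guidance_range(28.0, 2.0)
consensus_bn = 26.6
low_vs_consensus_pct = (low_bn / consensus_bn - 1) * 100

print(f"Implied year-ago adjusted EPS: ${implied_eps:.2f}")
print(f"Implied year-ago revenue: ${implied_revenue_bn:.2f}B")
print(f"Guidance band: ${low_bn:.2f}B to ${high_bn:.2f}B")
print(f"Low end of guidance vs. consensus: +{low_vs_consensus_pct:.1f}%")
```

On these inputs, even the low end of the guidance band, about $27.4 billion, sits above the $26.6 billion analysts expected.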

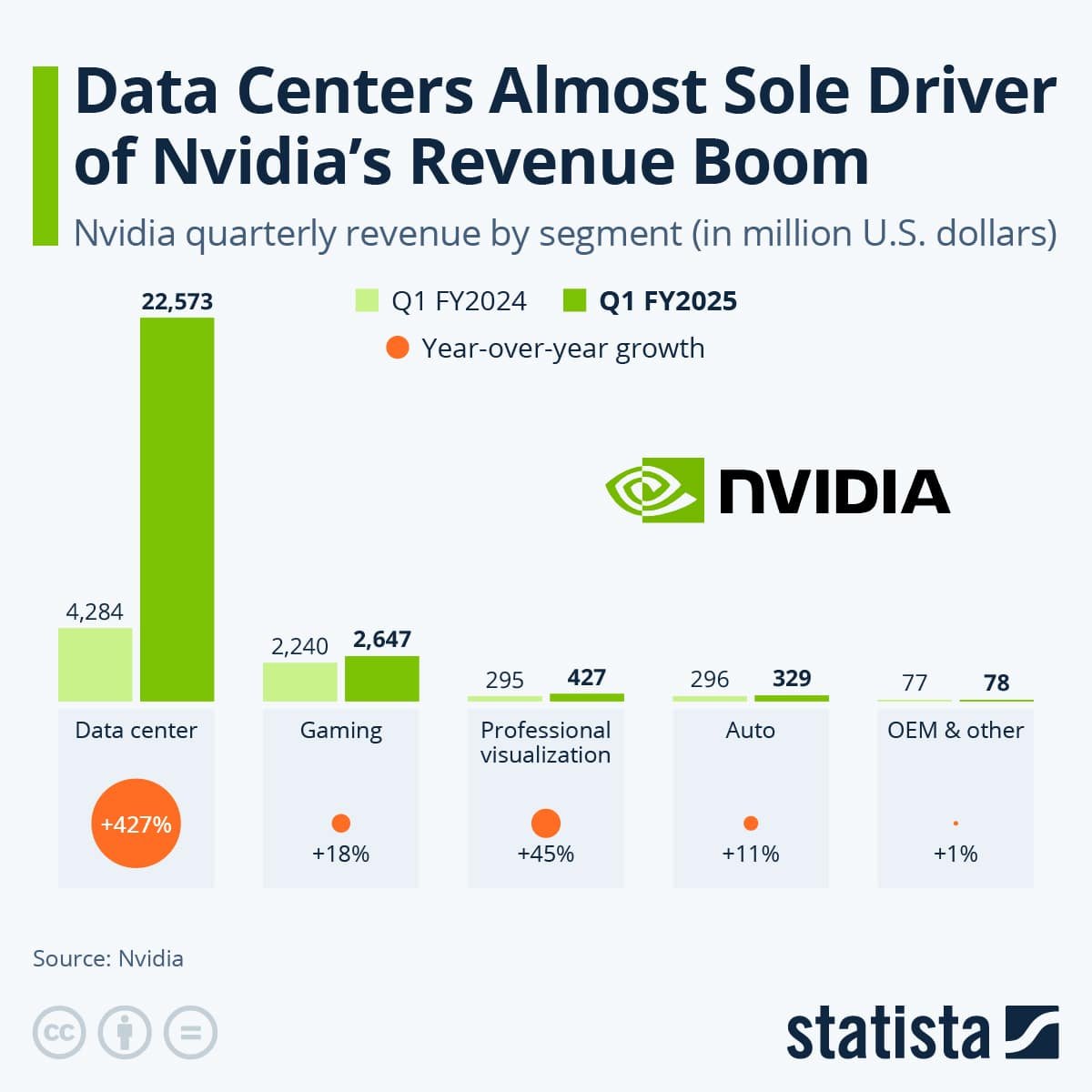

Nvidia’s data center segment, crucial for AI and reliant on high-powered server farms, generated $22.6 billion or 87% of its revenue between February and April 2024. Other segments also saw growth, with gaming and visualization solutions increasing by 18% and 45%, respectively, compared to fiscal Q1 2024. However, the data center segment’s growth was extraordinary, surging 427% year-over-year, as seen above.

In addition, Nvidia announced a 10-to-1 stock split, effective June 10 for shareholders as of June 7, and increased its quarterly dividend to $0.10 per share, up from $0.04. Following the earnings report, Nvidia’s stock rose by as much as 6% in extended trading.

Moreover, Huang highlighted the growing customer base for Nvidia chips beyond the major cloud service providers, mentioning companies like Meta, Tesla, and various pharmaceutical firms. He specifically pointed out the automotive industry as a significant user of Nvidia’s data-center chips.

Setting New Standards in Energy Efficiency

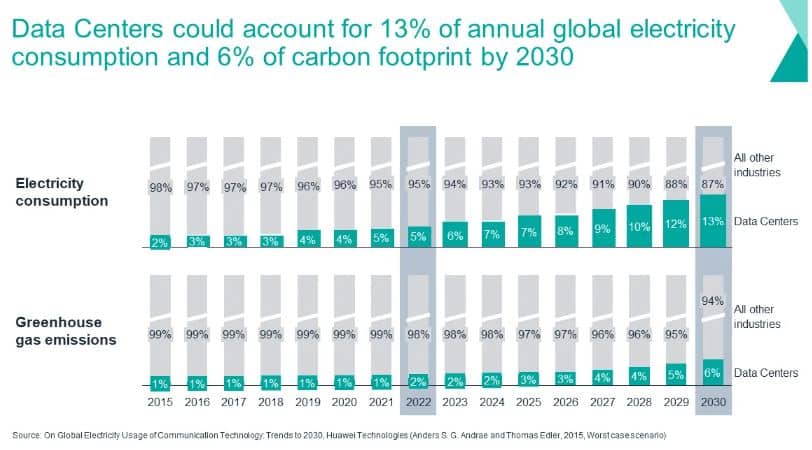

Nearly 75% of global carbon emissions stem from the production and consumption of energy, primarily due to the burning of fossil fuels for electricity. Data centers, which currently consume 460 terawatt-hours of electricity annually, contribute about 2% to this total. However, this share is expected to nearly triple to 6% by 2030 as data centers continue to expand.

Improving energy efficiency in data centers is crucial for reducing their carbon footprint and mitigating their environmental impact. By adopting more efficient technologies and practices, data centers can play a significant role in lowering overall carbon emissions.

Nvidia aims to address growing concerns about AI’s monetary cost and carbon footprint by highlighting Blackwell’s energy efficiency.

One expert at Microsoft has suggested that the Nvidia H100s currently in deployment will consume as much power as the entire city of Phoenix by the end of this year. What’s noteworthy about the new Blackwell GPU is its power efficiency, which Nvidia is now highlighting as a key selling point.

Traditionally, more powerful chips have also required more energy, and Nvidia focused primarily on raw performance rather than energy efficiency. However, when unveiling the Blackwell, CEO Jensen Huang emphasized its superior processing speed, which significantly reduces power consumption during training compared to the H100 and earlier A100 chips.

Huang noted that training an ultra-large AI model over 90 days would take 2,000 Blackwell GPUs drawing 4 megawatts of power, whereas the same task would take 8,000 older GPUs drawing 15 megawatts. That is roughly the power consumption of 8,000 homes versus 30,000 homes.
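Megawatts measure the rate of power draw, not total energy used, so it can help to convert Huang's comparison into megawatt-hours. The following is a minimal sketch using only the numbers quoted above. It assumes a constant draw for the full run and that the 90-day duration applies to both configurations; the function and variable names are illustrative.

```python
HOURS_PER_DAY = 24
TRAINING_DAYS = 90  # assumption: the same duration applies to both runs


def energy_mwh(power_mw, days):
    """Energy in megawatt-hours for a constant power draw held over `days`."""
    return power_mw * days * HOURS_PER_DAY


def kw_per_unit(power_mw, units):
    """Average kilowatts per unit (GPU or home) for a given total draw."""
    return power_mw * 1_000 / units


# Figures as quoted in the article.
blackwell = {"gpus": 2_000, "power_mw": 4, "homes": 8_000}
older = {"gpus": 8_000, "power_mw": 15, "homes": 30_000}

blackwell_mwh = energy_mwh(blackwell["power_mw"], TRAINING_DAYS)
older_mwh = energy_mwh(older["power_mw"], TRAINING_DAYS)
energy_saving_pct = (1 - blackwell_mwh / older_mwh) * 100

# Total system power divided by GPU count, not the rating of a single chip.
blackwell_kw_per_gpu = kw_per_unit(blackwell["power_mw"], blackwell["gpus"])
older_kw_per_gpu = kw_per_unit(older["power_mw"], older["gpus"])

# Household draw implied by the homes comparison.
blackwell_kw_per_home = kw_per_unit(blackwell["power_mw"], blackwell["homes"])
older_kw_per_home = kw_per_unit(older["power_mw"], older["homes"])

print(f"Blackwell run: {blackwell_mwh:,.0f} MWh")
print(f"Older-GPU run: {older_mwh:,.0f} MWh")
print(f"Energy saving: {energy_saving_pct:.1f}%")
print(f"Power per GPU: {blackwell_kw_per_gpu:.3f} kW vs {older_kw_per_gpu:.3f} kW")
print(f"Implied draw per home: {blackwell_kw_per_home} kW vs {older_kw_per_home} kW")
```

Under these assumptions the Blackwell run uses about 73% less energy. The saving comes from needing a quarter as many GPUs rather than from lower draw per GPU, which works out to roughly 2 kW of total system power per GPU in both cases. Both homes comparisons imply the same average household draw of 0.5 kW, so the two analogies are consistent with each other.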

Tech Titans Drive Nvidia’s AI Dominance

Undeniably, Nvidia stands at the forefront of the exploding demand for AI applications, driven by major tech giants like Tesla, Meta, Microsoft, and Alphabet. These companies’ recent management commentary underscores the significant potential for Nvidia’s business expansion in the AI sector.

Tesla’s ambitious plans to increase its Nvidia chip use by 140% highlight the critical role of Nvidia’s GPUs in training AI models for its full self-driving capabilities and upcoming robotaxi launch. This substantial investment represents a major endorsement of Nvidia’s technology by Tesla CEO Elon Musk.

Similarly, Meta’s aggressive spending to bolster its AI infrastructure aligns with CEO Mark Zuckerberg’s vision of establishing Meta as a leading AI company globally. As Meta continues to develop its LLaMA large language model and its Meta AI chatbot, Nvidia’s chips remain integral to its AI training efforts.

Microsoft is also experiencing surging demand for AI that outstrips its available capacity. The tech giant is investing in its own AI development using OpenAI’s GPT models, and it plans to ramp up spending to meet the growing demand, with Nvidia’s chips playing a crucial role in its cloud service offerings.

Finally, Alphabet’s substantial capital expenditures in the first quarter, primarily directed towards Google Cloud and advanced AI models, further validate the importance of Nvidia’s technology in powering AI-driven initiatives. While Alphabet uses its own chip designs for certain AI tasks, it continues to rely on Nvidia chips to meet its cloud customers’ needs.

While most AI runs on renewable energy, concerns persist about water consumption for data center cooling. As AI adoption grows, renewable energy demand could outpace supply, prompting interest in expediting nuclear plant approvals, notably by Microsoft.

Overall, the overwhelming demand for AI compute presents a significant opportunity for Nvidia, reflected in its robust financial performance and soaring gross margins. By highlighting the Blackwell GPU’s energy efficiency, the company also signals that it is starting to take AI’s sustainability into account.